Friday, December 14, 2012

Tuesday, November 20, 2012

Project5

Part1 Globe:

Implemented

- Bump mapped terrain

float center = texture2D( u_Bump, v_Texcoord + vec2( -u_time*0.8, 0.0 ) ).r;

float right = texture2D( u_Bump, v_Texcoord + vec2( -u_time*0.8, 0.0 ) + vec2( 1.0/1000.0, 0.0 ) ).r;

float top = texture2D( u_Bump, v_Texcoord + vec2( -u_time*0.8, 0.0 ) + vec2( 0.0, 1.0/500.0 ) ).r;

vec3 perturbedNorm = normalize( vec3( center - right, center - top, 0.2 ) );

vec3 bumpNorm = normalize( eastNorthUpToEyeCoordinates( v_positionMC, normal ) * perturbedNorm );

float BumpDiffuse = max( dot( u_CameraSpaceDirLight, bumpNorm ), 0.0 );

- Rim lighting to simulate atmosphere

- Nighttime lights on the dark side of the globe

- Specular mapping

- Moving clouds (from west to east)

- Orbiting Moon with texture mapping
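For the bump-mapped terrain item above, here is a minimal CPU sketch in C++ of how the perturbed normal is built from three height samples. It only covers the tangent-space part; the rotation into eye space (eastNorthUpToEyeCoordinates in the shader) is not reproduced. The names Vec3, perturbedNormal and zScale are made up for this sketch.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

// Tangent-space normal from three height samples: the texel itself, its
// right neighbour and its top neighbour. zScale plays the role of the 0.2
// constant in the shader: a larger value flattens the bumps.
Vec3 perturbedNormal(float center, float right, float top, float zScale = 0.2f) {
    assert(zScale > 0.0f);  // guarantees a non-zero vector to normalize
    Vec3 n{center - right, center - top, zScale};
    float len = std::sqrt(n.x * n.x + n.y * n.y + n.z * n.z);
    return {n.x / len, n.y / len, n.z / len};
}
```

On a flat height field the result is straight up (0, 0, 1); a rising slope to the right tilts the normal toward negative x.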

Part2 SSAO(Screen Space Ambient Occlusion):

gatherOcclusion:

float distance = length( occluder_position - pt_position );

float overhead = dot( pt_normal, normalize( occluder_position - pt_position ) );

float planar = 1.0 - abs( dot( pt_normal, occluder_normal ) );

return planar*max( 0.0, overhead )*( 1.0/(1.0 + distance) ) ;

regularSample:

vec2 occludePos = texcoord + vec2( i, j )*REGULAR_SAMPLE_STEP;

vec3 occPos = samplePos(occludePos);

vec3 occNorm = normalize( sampleNrm(occludePos) );

accumOcclusion += gatherOcclusion( normalize(normal), position, occNorm, occPos ) ;
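To sanity-check the occlusion term outside the shader, here is a rough C++ port of gatherOcclusion. The Vec3 helpers are stand-ins for the GLSL built-ins, and the zero-distance guard is my addition for the sketch, not something the shader does.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

inline Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
inline float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
inline float length(Vec3 a) { return std::sqrt(dot(a, a)); }

// CPU port of the gatherOcclusion shader function.
// Both normals are expected to be unit length.
float gatherOcclusion(Vec3 ptNormal, Vec3 ptPosition,
                      Vec3 occluderNormal, Vec3 occluderPosition) {
    assert(std::fabs(length(ptNormal) - 1.0f) < 1e-3f);
    assert(std::fabs(length(occluderNormal) - 1.0f) < 1e-3f);

    Vec3 toOccluder = sub(occluderPosition, ptPosition);
    float distance = length(toOccluder);
    if (distance == 0.0f) return 0.0f;  // sample landed on the point itself

    // How far the occluder sits above the surface (1 = straight overhead).
    float overhead = dot(ptNormal, toOccluder) / distance;
    // Surfaces facing the same (or opposite) way contribute nothing.
    float planar = 1.0f - std::fabs(dot(ptNormal, occluderNormal));
    return planar * std::max(0.0f, overhead) * (1.0f / (1.0f + distance));
}
```

An occluder one unit straight above the point, with a normal perpendicular to the point's normal, gives 1 * 1 * 1/(1 + 1) = 0.5. Occluders below the surface or coplanar with it give 0.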

Part3 Vertex Pulsing

float displacement = scaleFactor * (0.5 * sin(Position.y * u_frequency * u_time) + 1.0);

vec3 newPosition = Position + displacement * Normal;
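The same pulsing formula as a small C++ sketch, handy for checking the range of the displacement. Vec3, pulseDisplacement and pulseVertex are names invented here; the parameters mirror Position, Normal, u_frequency, u_time and scaleFactor from the shader.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

// Displacement from the pulsing formula. Since sin() is in [-1, 1], the
// result stays between 0.5 * scaleFactor and 1.5 * scaleFactor.
float pulseDisplacement(float y, float frequency, float time, float scaleFactor) {
    assert(scaleFactor >= 0.0f);  // a negative scale would push vertices inward
    return scaleFactor * (0.5f * std::sin(y * frequency * time) + 1.0f);
}

// Move the vertex along its normal by the displacement.
Vec3 pulseVertex(Vec3 position, Vec3 normal,
                 float frequency, float time, float scaleFactor) {
    float d = pulseDisplacement(position.y, frequency, time, scaleFactor);
    return {position.x + d * normal.x,
            position.y + d * normal.y,
            position.z + d * normal.z};
}
```

At time 0 the sine term vanishes, so every vertex is simply pushed out by scaleFactor along its normal.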

Friday, November 9, 2012

Image Processing / Vertex Shading

Video

Part1:

Original picture:

Features implemented:

- Image negative: vec3(1.0) - rgb

- Gaussian blur: GaussianBlurMatrix = 1/16 [[1 2 1][2 4 2][1 2 1]]

- Grayscale: vec3 W = vec3(0.2125, 0.7154, 0.0721); float luminance = dot(rgb, W);

- Edge detection: Sobel-horizontal = [[-1 -2 -1][0 0 0][1 2 1]]; Sobel-vertical = [[-1 0 1][-2 0 2][-1 0 1]].

- Toon shading
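The blur and edge-detection items above are both 3x3 convolutions, so here is a CPU sketch in C++ of the grayscale weights, the two kernel types and the convolution itself. The image layout (one float per pixel, row by row), the clamp-to-edge behaviour and all function names are assumptions of this sketch, not taken from my shader.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Kernel3 = std::array<float, 9>;  // 3x3 weights, row-major

const Kernel3 kGaussian = {1 / 16.0f, 2 / 16.0f, 1 / 16.0f,
                           2 / 16.0f, 4 / 16.0f, 2 / 16.0f,
                           1 / 16.0f, 2 / 16.0f, 1 / 16.0f};
const Kernel3 kSobelHorizontal = {-1.0f, -2.0f, -1.0f,
                                   0.0f,  0.0f,  0.0f,
                                   1.0f,  2.0f,  1.0f};
const Kernel3 kSobelVertical = {-1.0f, 0.0f, 1.0f,
                                -2.0f, 0.0f, 2.0f,
                                -1.0f, 0.0f, 1.0f};

// Grayscale value using the luminance weights listed above.
float luminance(float r, float g, float b) {
    return 0.2125f * r + 0.7154f * g + 0.0721f * b;
}

// Apply a 3x3 kernel at pixel (x, y); neighbours outside the image are
// clamped to the nearest edge pixel.
float convolve3x3(const std::vector<float>& image, int width, int height,
                  int x, int y, const Kernel3& kernel) {
    assert(width > 0 && height > 0);
    assert(image.size() == static_cast<std::size_t>(width) * static_cast<std::size_t>(height));
    float sum = 0.0f;
    for (int dy = -1; dy <= 1; ++dy) {
        for (int dx = -1; dx <= 1; ++dx) {
            int sx = std::min(std::max(x + dx, 0), width - 1);
            int sy = std::min(std::max(y + dy, 0), height - 1);
            sum += kernel[(dy + 1) * 3 + (dx + 1)] * image[sy * width + sx];
        }
    }
    return sum;
}

// Edge strength: magnitude of the two Sobel responses.
float sobelMagnitude(const std::vector<float>& image, int width, int height, int x, int y) {
    float gh = convolve3x3(image, width, height, x, y, kSobelHorizontal);
    float gv = convolve3x3(image, width, height, x, y, kSobelVertical);
    return std::sqrt(gh * gh + gv * gv);
}
```

A uniform image comes out of the blur unchanged and has zero edge strength, which is a quick way to check that the kernel weights are right.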

Optional features:

- Pixelate: define the pixel size (based on the picture size). Pixel indices are calculated by dividing the original texture coordinates by the pixel size and rounding down. The new coordinates are the pixel index multiplied by the pixel size.

- Brightness: u = (rgb.r + rgb.g + rgb.b) / 3.0;

- Contrast: (m-a)/(n-a) vs m/n

- Night vision: multiply the color by green only.
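The pixelate step from the list above, as a small C++ sketch. Vec2 and pixelate are names made up here, and blockSize is assumed to be given in texture-coordinate units (for example 8 texels divided by the image width).

```cpp
#include <cassert>
#include <cmath>

struct Vec2 { float u, v; };

// Snap a texture coordinate to the corner of the block it falls into.
// Every coordinate inside one block maps to the same sample position,
// which is what produces the blocky look.
Vec2 pixelate(Vec2 texcoord, Vec2 blockSize) {
    assert(blockSize.u > 0.0f && blockSize.v > 0.0f);
    float indexU = std::floor(texcoord.u / blockSize.u);  // block index
    float indexV = std::floor(texcoord.v / blockSize.v);
    return {indexU * blockSize.u, indexV * blockSize.v};
}
```

With a block size of 0.25, the coordinates (0.30, 0.80) and (0.49, 0.99) both land on (0.25, 0.75).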

Part2:

Sea wave:

float s = sin(pos.x * 2.0 * PI + time); float t = cos(pos.y * 2.0 * PI + time); float r = sqrt(s*s + t*t); height = sin(r) / r;
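Here is the sea-wave height as a C++ sketch. The one thing it adds to the formula is a guard for r close to zero, where sin(r)/r would divide by zero; that guard and the name waveHeight are my own additions.

```cpp
#include <cassert>
#include <cmath>

// Height of the wave at (x, y) for a given time: sin(r) / r with
// r = sqrt(s^2 + t^2), s = sin(2*pi*x + time), t = cos(2*pi*y + time).
float waveHeight(float x, float y, float time) {
    const float kTwoPi = 6.28318530718f;
    float s = std::sin(x * kTwoPi + time);
    float t = std::cos(y * kTwoPi + time);
    float r = std::sqrt(s * s + t * t);
    assert(r <= 1.5f);  // s and t are in [-1, 1], so r never exceeds sqrt(2)
    if (r < 1e-6f) return 1.0f;  // sin(r)/r tends to 1 as r tends to 0
    return std::sin(r) / r;
}
```

At the origin and time 0, s = 0 and t = 1, so the height is sin(1), about 0.84.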

Tuesday, November 6, 2012

Rasterizer -- some triangles are missing

The optional features I chose are:

- backface culling

- interactive camera

Back-face culling

Video:

Friday, October 12, 2012

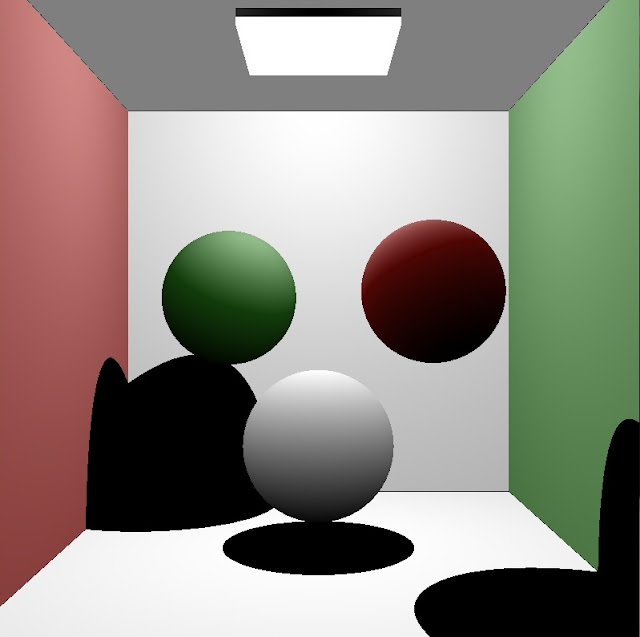

Path Tracer--Got basic features right

- Anti-aliasing:

I don't know why there are white lines on the white sphere.

- Depth of Field:

- Short Demo:

Path tracer--Got the color right

I made mistakes in the Accumulate function for color accumulation.

Here is the corrected rendered scene:

Tuesday, October 9, 2012

Path Tracer -- Always get flat shading

- calculateBSDF

- calculateFresnel

- calculateTransmissionDirection

- stream compaction

The problem was that in the remove_if call I accidentally removed all the rays that still needed to be traced. The colors were wrong because I added the contributions where I should have multiplied them.
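Both mistakes are easy to show on the CPU. In this C++ sketch, std::remove_if stands in for the GPU version, and the PathRay layout, IsTerminated, compactRays and attenuate are hypothetical names, not my actual path tracer code. The key point is that the predicate must return true for the rays to throw away, i.e. the finished ones.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical per-ray state: target pixel, liveness flag and the color
// carried along the path so far.
struct PathRay {
    int pixel;
    bool alive;
    float r, g, b;
};

// remove_if removes the elements for which the predicate is TRUE, so the
// predicate has to flag terminated rays. Flagging the live ones instead
// throws away exactly the rays that still need tracing.
struct IsTerminated {
    bool operator()(const PathRay& ray) const { return !ray.alive; }
};

// Stream compaction: drop finished rays and keep the rest in order.
std::size_t compactRays(std::vector<PathRay>& rays) {
    rays.erase(std::remove_if(rays.begin(), rays.end(), IsTerminated()), rays.end());
    assert(std::none_of(rays.begin(), rays.end(), IsTerminated()));
    return rays.size();
}

// Color accumulation along a path: each bounce multiplies the carried
// color by the surface color. Adding instead only ever makes it brighter.
void attenuate(PathRay& ray, float surfaceR, float surfaceG, float surfaceB) {
    ray.r *= surfaceR;
    ray.g *= surfaceG;
    ray.b *= surfaceB;
}
```

After compaction only the live rays remain, in their original order, and two bounces off a (0.5, 0.25, 1.0) surface leave a white ray at (0.25, 0.0625, 1.0).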

With both fixes in place, I got this:

Error: expected an identifier

error : expected an identifier C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v4.0\include\thrust\device_ptr.h 286

The problem turned out to be with the inclusion of the Thrust headers themselves. Even just including thrust/device_ptr.h threw errors. For some reason, the Thrust headers have to be included before all the other C headers in this project.

Sunday, September 30, 2012

Pushing to Git returning Error Code 403 fatal: HTTP request failed

Why:

GitHub seems to support reading and writing the repo only over SSH, although the HTTPS URL is also labeled 'Read & Write'. So you need to change the repo config on your PC to use SSH:

Solution:

- Manually edit the .git/config file under your repo directory. Find the url= entry under the section [remote "origin"]. Change all the text before the @ symbol to ssh://git. Save the config file and quit. Now you can use git push origin master to sync your repo on GitHub.

- Another solution is to use the shell command:

git remote set-url origin ssh://git@github.com/username/projectName.git

Interactive Camera and specular reflection

- Interactive Camera

Keys:

'W': move forward (in Z direction)

'S': move backward (in Z direction)

'A': move to left

'D': move to right

'U': up

'I': down

- Specular Reflection

Reflection not right:

Without reflection:

Weird Pattern on the Back Wall

- Raycasting from a camera into a scene through a pixel grid

- Phong lighting for one point light source

- Diffuse lambertian surfaces

- Raytraced shadows

- Cube intersection testing

- Sphere surface point sampling

Finally, I found out why. It was the EPSILON I used in the box-ray intersection test to detect a ray that is parallel to one of the box's planes. EPSILON was defined as 0.000000001, which seems too small for comparing float values. I have changed it to 0.001.
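For reference, here is a C++ sketch of a slab-style box-ray test with the parallel check in it. This is not my actual CUDA code: intersectBox, kEpsilon and the array-based signature are made up for the sketch. It also shows one reason 0.000000001 is suspicious: it is smaller than the float machine epsilon (about 1.19e-7), so it is below the rounding error you would expect on a direction component that went through a normalize.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <limits>
#include <utility>

const float kEpsilon = 0.001f;  // the value I ended up with

// Slab test against the axis-aligned box [lo, hi]. Returns the distance
// along the ray to the nearest hit in front of the origin, or -1 on a miss.
float intersectBox(const float origin[3], const float dir[3],
                   const float lo[3], const float hi[3]) {
    float tNear = -std::numeric_limits<float>::infinity();
    float tFar = std::numeric_limits<float>::infinity();
    for (int axis = 0; axis < 3; ++axis) {
        assert(lo[axis] <= hi[axis]);
        if (std::fabs(dir[axis]) < kEpsilon) {
            // Ray is (nearly) parallel to this pair of planes: it can only
            // hit the box if the origin already lies between them.
            if (origin[axis] < lo[axis] || origin[axis] > hi[axis]) return -1.0f;
            continue;
        }
        float t0 = (lo[axis] - origin[axis]) / dir[axis];
        float t1 = (hi[axis] - origin[axis]) / dir[axis];
        if (t0 > t1) std::swap(t0, t1);
        tNear = std::max(tNear, t0);
        tFar = std::min(tFar, t1);
        if (tNear > tFar || tFar < 0.0f) return -1.0f;  // slabs do not overlap, or box is behind
    }
    return tNear >= 0.0f ? tNear : tFar;  // tFar when the origin is inside the box
}
```

For a unit box centered at the origin, a ray from (0, 0, -2) along +Z hits at distance 1.5, and the same ray shifted to x = 2 misses through the parallel check.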

Now, the picture looks like this:

Friday, September 28, 2012

Raycast and box-ray intersection

Basic raycasting from the camera and box-ray intersection are finished. At this point, I can render a 2D picture.

- Raycast:

Raycast direction test:

Here is a screenshot of one of the images I got, with flat shading:

- Box-ray intersection
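As a reference for the raycast step, here is a C++ sketch of how a ray direction can be computed for one pixel of a pinhole camera. The conventions are assumptions of this sketch and may differ from the project's camera: fovYDegrees is the full vertical field of view, pixel (0, 0) is the top-left corner, and rays go through pixel centers. Vec3 and its helpers are stand-ins for a real vector library.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

Vec3 add(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 scale(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
Vec3 normalize(Vec3 a) {
    float len = std::sqrt(a.x * a.x + a.y * a.y + a.z * a.z);
    assert(len > 0.0f);
    return scale(a, 1.0f / len);
}

// Unit direction of the ray through the center of pixel (px, py).
Vec3 rayDirection(Vec3 view, Vec3 up, float fovYDegrees,
                  int width, int height, int px, int py) {
    assert(width > 0 && height > 0);
    Vec3 forward = normalize(view);
    Vec3 right = normalize(cross(forward, up));
    Vec3 trueUp = cross(right, forward);

    // Half-extents of the image plane at distance 1 from the eye.
    float halfH = std::tan(fovYDegrees * 0.5f * 3.14159265f / 180.0f);
    float halfW = halfH * static_cast<float>(width) / static_cast<float>(height);

    // Pixel center in [-1, 1]: sx grows to the right, sy grows upward.
    float sx = ((px + 0.5f) / width) * 2.0f - 1.0f;
    float sy = 1.0f - ((py + 0.5f) / height) * 2.0f;

    Vec3 d = add(forward, add(scale(right, sx * halfW), scale(trueUp, sy * halfH)));
    return normalize(d);
}
```

A quick direction test is to check that the center pixel of an odd-sized image looks straight along the view vector, and that pixels to its right and above it lean right and up.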

Thursday, September 27, 2012

CUDA GPU Ray Tracer

This is a course project for CIS 565.

Ray Tracing

Basic algorithm: For each pixel, shoot a ray into the scene and check it for intersections. If the ray hits something, cast a shadow ray toward the light source to see whether the light is visible, and shade the current pixel accordingly. If the surface is diffuse, the ray stops there. If it is reflective, shoot a new ray, the incident ray reflected about the surface normal, and repeat until the tracing depth is reached or the ray hits a light or a diffuse surface.

The following picture demonstrates how the ray tracing algorithm works (taken from Wikipedia).

Since CUDA does not support recursion, we need to use iterative ray tracing. Useful link: http://en.wikipedia.org/wiki/Ray_tracing_%28graphics%29
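To make the iterative version concrete, here is a single-channel C++ sketch of the loop that replaces the recursive call. Everything in it is hypothetical: the Ray and Hit structs, the Scene callback, the toy scene, and the way local shading is blended with the reflection by (1 - reflectivity). The idea is just that a running weight carries what the recursion would have multiplied on the way back up.

```cpp
#include <cassert>
#include <cmath>
#include <functional>

struct Ray { float origin[3]; float direction[3]; };

struct Hit {
    bool found;          // false if the ray left the scene
    float shade;         // local shading, shadow ray already applied
    float reflectivity;  // 0 for diffuse surfaces and lights
    Ray reflected;       // only meaningful when reflectivity > 0
};

using Scene = std::function<Hit(const Ray&)>;

// Iterative replacement for a recursive trace(ray, depth).
float traceIterative(const Scene& scene, Ray ray, int maxDepth) {
    assert(maxDepth >= 1);
    float color = 0.0f;
    float weight = 1.0f;  // product of the reflectivities seen so far
    for (int depth = 0; depth < maxDepth; ++depth) {
        Hit hit = scene(ray);
        if (!hit.found) break;
        color += weight * (1.0f - hit.reflectivity) * hit.shade;
        if (hit.reflectivity <= 0.0f) break;  // light or diffuse surface: stop
        weight *= hit.reflectivity;
        ray = hit.reflected;                  // continue with the bounced ray
    }
    return color;
}

// Toy scene: rays going toward -Z hit a half-mirror (shade 0.2) that
// bounces them toward +Z, where they hit a diffuse wall (shade 0.8).
Hit toyScene(const Ray& ray) {
    if (ray.direction[2] < 0.0f) {
        Ray bounce = ray;
        bounce.direction[2] = -ray.direction[2];
        return {true, 0.2f, 0.5f, bounce};
    }
    return {true, 0.8f, 0.0f, ray};
}
```

In the toy scene a ray toward -Z ends up with 0.5 * 0.2 from the mirror plus 0.5 * 0.8 from the wall, so 0.5 in total. With a tracing depth of 1 only the first term, 0.1, is collected.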

- Box-ray intersection

Useful link: http://www.siggraph.org/education/materials/HyperGraph/raytrace/rtinter3.htm

- Sphere surface point sampling

Useful link: Wolfram MathWorld: http://mathworld.wolfram.com/SpherePointPicking.html
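A C++ sketch of the sphere point picking described on that MathWorld page: pick z uniformly in [-1, 1] and an angle uniformly in [0, 2*pi). The two random numbers u and v are passed in so the function stays deterministic; the names Vec3 and samplePointOnSphere are made up here.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

// Map two uniform random numbers u, v in [0, 1] to a point that is
// uniformly distributed on the surface of a sphere.
Vec3 samplePointOnSphere(float u, float v, Vec3 center, float radius) {
    assert(u >= 0.0f && u <= 1.0f && v >= 0.0f && v <= 1.0f);
    float z = 1.0f - 2.0f * u;                            // uniform in [-1, 1]
    float theta = 2.0f * 3.14159265f * v;                 // uniform angle
    float ring = std::sqrt(std::max(0.0f, 1.0f - z * z)); // radius of the circle at height z
    return {center.x + radius * ring * std::cos(theta),
            center.y + radius * ring * std::sin(theta),
            center.z + radius * z};
}
```

Picking the two angles of spherical coordinates uniformly instead would bunch the samples up at the poles, which is why z is the uniform variable here.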
